Storage arrays and hardware

Storage arrays and hardware are available with various storage and transport/link protocols. When building a fabric that consists of the dual HA DDP Head and storage arrays and/or hardware, A/V FS must be able to communicate with these devices so that it becomes aware of their LUNs.

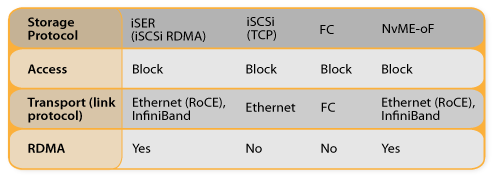

See the table for the supported block-based storage and transport/link protocols. A/V FS provides high availability (HA) for the metadata. For HA on the data paths, multipath support is included in the A/V FS driver. Storage arrays from Infortrend, Seagate and the big four are truly HA because they synchronise their write caches between controllers. These arrays can be connected via multipath.
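The document does not describe how the A/V FS driver implements multipath. The following is a minimal Python sketch of the general idea only, assuming a simple round-robin policy over healthy paths. The names `Path`, `MultipathDevice`, `select_path` and `fail` are hypothetical and not part of any A/V FS API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Path:
    """One physical route to a LUN, e.g. host port -> array controller (hypothetical)."""
    name: str
    healthy: bool = True


@dataclass
class MultipathDevice:
    """A LUN reachable over several paths (hypothetical model, not the A/V FS driver)."""
    lun: int
    paths: List[Path] = field(default_factory=list)
    _next: int = field(default=0, init=False)  # index of the next path to try

    def select_path(self) -> Path:
        """Return the next healthy path in round-robin order, skipping failed ones."""
        n = len(self.paths)
        for _ in range(n):
            candidate = self.paths[self._next % n]
            self._next = (self._next + 1) % n
            if candidate.healthy:
                return candidate
        # No healthy path left: the LUN is unreachable from this host.
        raise OSError(f"LUN {self.lun}: all paths have failed")

    def fail(self, name: str) -> None:
        """Mark a path as failed, e.g. after a link or controller error."""
        for p in self.paths:
            if p.name == name:
                p.healthy = False
```

With two healthy paths the sketch alternates between them. Once one is marked failed, every request is routed over the remaining path, and an error is raised only when no healthy path is left.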

A/V FS/iSCSI/Ethernet

This protocol combination is available with bandwidths of up to 200 Gb/s.
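For sizing, the quoted line rate converts to bytes per second as shown below. This is the raw link rate; usable throughput will be somewhat lower once Ethernet, TCP/IP and iSCSI protocol overhead is taken into account.

```latex
\[
\frac{200\ \text{Gb/s}}{8\ \text{bit/byte}} = 25\ \text{GB/s}
\]
```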

A/V FS/FC

Fibre Channel is a well-established storage protocol and needs no further explanation.

A/V FS/NVMe-oF/RoCEv2

NVMe-oF over RoCEv2 explained

A/V FS/NVMe-oF/InfiniBand

NVMe-oF over InfiniBand explained

Standard & extreme performance integrated

Not every server, workstation or desktop needs extreme performance.

East-West communication/data transfer

About data tiering and Project Caching